DeepSeek-V3: The Pinnacle of Open-Source AI

DeepSeek-V3 currently stands as one of the strongest open-source AI models, outperforming competitors such as Llama 3.1 405B, Qwen, and Mistral on most published benchmarks. It is broadly on par with leading closed-source models like OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet. It was also trained at a reported cost of only $5.576 million, a figure that covers the GPU time of the final training run rather than earlier research and experiments. For comparison, GPT-4 reportedly cost more than $100 million to train. DeepSeek-V3 also offers fast inference and low-priced API access, making it a practical choice for organizations of all sizes.

1. Introduction

About DeepSeek

DeepSeek, a Chinese artificial intelligence company founded in 2023, has made remarkable advancements within just one year of its inception. Established by Liang Wenfeng, the founder of the Chinese quantitative hedge fund High-Flyer, DeepSeek focuses on developing advanced AI models with a vision of achieving artificial general intelligence (AGI). In this short span, the company has launched multiple groundbreaking models, including DeepSeek-V3, which boasts 671 billion parameters and rivals industry leaders like GPT-4. DeepSeek’s rapid progress and commitment to open-sourcing its models have positioned it as a disruptive force in the global AI landscape.

Why DeepSeek-V3 Matters

DeepSeek-V3 is more than just an AI model; it’s a statement about the future of open-source AI. By addressing both performance and cost barriers, it has the potential to reshape AI adoption across industries.

2. Architectural Innovations

Mixture-of-Experts (MoE) Framework

At the heart of DeepSeek-V3 lies its Mixture-of-Experts (MoE) architecture. With 671 billion parameters, this model activates only 37 billion parameters per token. This selective activation ensures a balance between computational efficiency and model depth, making it one of the most advanced architectures in AI.
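To make the idea of selective activation concrete, here is a minimal sketch of routing one token to the top-k experts of a toy MoE layer. It uses generic softmax top-k gating with hypothetical sizes (8 experts, 2 active per token) and is not DeepSeek's actual router; `softmax` and `route_token` are illustrative names. The last lines compute the share of parameters active per token from the figures quoted above.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    # Indices of the k experts with the highest routing probability.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return {i: probs[i] / weight_sum for i in chosen}

# Hypothetical toy layer: 8 experts, 2 active per token.
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(8)]
gates = route_token(logits, top_k=2)
print("expert -> gate weight:", gates)

# Share of DeepSeek-V3's parameters active per token (figures from the text).
active_fraction = 37e9 / 671e9
print(f"active share: {active_fraction:.1%}")  # about 5.5%
```

Only the chosen experts run for a given token, so compute per token scales with the active parameters rather than the full parameter count.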

Multi-head Latent Attention (MLA)

MLA compresses the attention keys and values into a compact latent representation, which shrinks the key-value cache that must be held in memory during generation. This allows the model to run inference faster while maintaining accuracy, even on long and complex inputs.
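One way to see why a latent attention scheme can speed up inference is to compare how many values must be cached per token. The sketch below is back-of-the-envelope arithmetic only: the layer count, head count, and dimensions are illustrative assumptions that are not given in this article, and `kv_cache_bytes_per_token` is a hypothetical helper.

```python
def kv_cache_bytes_per_token(values_per_layer, num_layers, bytes_per_value=2):
    """Memory needed to cache one token across all layers (2 bytes = 16-bit values)."""
    return values_per_layer * num_layers * bytes_per_value

# Illustrative dimensions (assumptions, not taken from the article).
NUM_LAYERS = 61
NUM_HEADS = 128
HEAD_DIM = 128
LATENT_DIM = 512  # size of the compressed key/value latent
ROPE_DIM = 64     # extra positional key cached alongside the latent

# Standard multi-head attention caches full keys and values for every head.
standard = kv_cache_bytes_per_token(2 * NUM_HEADS * HEAD_DIM, NUM_LAYERS)
# A latent scheme caches one small vector per layer instead.
latent = kv_cache_bytes_per_token(LATENT_DIM + ROPE_DIM, NUM_LAYERS)

reduction = standard / latent
print(f"standard cache: {standard:,} bytes per token")
print(f"latent cache:   {latent:,} bytes per token")
print(f"reduction:      {reduction:.1f}x")
```

A smaller cache means less memory traffic per generated token and more room for long contexts or larger batches.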

Auxiliary-Loss-Free Load Balancing

Traditional MoE load balancing relies heavily on auxiliary losses, which add training complexity and can degrade model quality. DeepSeek-V3 eliminates this dependency by adjusting a per-expert bias during routing instead, keeping experts evenly loaded without an extra loss term.
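A minimal simulation can illustrate the general idea of balancing without an auxiliary loss: a per-expert bias is added to the routing score for expert selection only, then nudged down for overloaded experts and up for underloaded ones after each batch. This is a toy sketch under stated assumptions, not DeepSeek's implementation; the expert count, step size, and the skew toward expert 0 are all hypothetical.

```python
import random

NUM_EXPERTS = 4  # hypothetical toy size
TOP_K = 1        # experts selected per token
STEP = 0.05      # hypothetical bias update step

def select_experts(scores, biases, top_k):
    """Rank experts by score plus bias; the bias influences selection only."""
    ranked = sorted(range(len(scores)), key=lambda e: scores[e] + biases[e], reverse=True)
    return ranked[:top_k]

def update_biases(biases, loads, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    updated = []
    for bias, load in zip(biases, loads):
        if load > mean_load:
            bias -= step
        elif load < mean_load:
            bias += step
        updated.append(bias)
    return updated

def batch_loads(biases, rng, num_tokens=200):
    """Count tokens per expert for one batch whose affinities favor expert 0."""
    loads = [0] * NUM_EXPERTS
    for _ in range(num_tokens):
        scores = [rng.random() + (0.5 if e == 0 else 0.0) for e in range(NUM_EXPERTS)]
        for expert in select_experts(scores, biases, TOP_K):
            loads[expert] += 1
    return loads

rng = random.Random(0)
biases = [0.0] * NUM_EXPERTS
loads_before = batch_loads(biases, rng)
for _ in range(50):
    biases = update_biases(biases, batch_loads(biases, rng), STEP)
loads_after = batch_loads(biases, rng)
print("loads before balancing:", loads_before)
print("loads after balancing: ", loads_after)
```

Because the bias changes only which experts are selected, no balancing term has to be added to the training loss.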

3. Training Methodology

Data Scale and Quality

DeepSeek-V3 was trained on an extensive dataset comprising 14.8 trillion tokens, sourced from diverse, high-quality domains, including curated web content, academic papers, and proprietary datasets. The company prioritized filtering and preprocessing to ensure data quality, reducing noise and improving contextual relevance. DeepSeek leveraged partnerships and open-source contributions to access a wide variety of training data while adhering to ethical data usage practices.

FP8 Mixed Precision Training

Using FP8 mixed precision significantly improved the training efficiency of DeepSeek-V3 by reducing computational overhead and memory consumption without a meaningful loss in model accuracy. FP8 (8-bit floating point) allows faster matrix computations and better GPU utilization, enabling training to scale effectively across massive datasets and complex architectures. The smaller format also lowers energy use and hardware strain, contributing to the cost-efficient training of DeepSeek-V3.
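The memory effect of a narrower number format is simple arithmetic. The sketch below compares raw weight storage for the 671 billion parameters quoted earlier at three common precisions; it deliberately ignores optimizer state, activations, and any higher-precision master copies that a mixed-precision setup keeps, so treat it as a lower bound rather than a description of the actual training footprint.

```python
TOTAL_PARAMS = 671e9  # parameter count quoted in the article

# Storage per value for each numeric format.
BYTES_PER_VALUE = {"FP32": 4, "BF16": 2, "FP8": 1}

def weight_storage_gb(num_params, bytes_per_value):
    """Raw storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_value / 1e9

storage = {fmt: weight_storage_gb(TOTAL_PARAMS, size) for fmt, size in BYTES_PER_VALUE.items()}
for fmt, gigabytes in storage.items():
    print(f"{fmt}: {gigabytes:,.0f} GB")
# FP32: 2,684 GB
# BF16: 1,342 GB
# FP8: 671 GB
```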

HAI-LLM Framework

The HAI-LLM framework is a training system that combines hardware-aware strategies with algorithmic innovations to ensure efficient and stable training across diverse environments. Key features include tailored hardware optimizations for NVIDIA H800 GPUs, support for FP8 mixed precision to reduce memory requirements, and advanced gradient stability techniques to prevent training interruptions. Additionally, its robust data pipeline minimizes bottlenecks, enabling seamless handling of the massive 14.8 trillion-token dataset used for DeepSeek-V3. This framework reduced training costs and ensured scalability and reliability, cementing its role in delivering state-of-the-art AI performance.

Post-Training Distillation

DeepSeek-V3’s advanced reasoning capabilities are a result of a specialized post-training process. By distilling reasoning skills from its DeepSeek-R1 series models, the company has incorporated verification and reflection patterns into the V3 model. This enhances the model’s ability to perform complex reasoning tasks while maintaining precise control over output style and length. These post-training innovations ensure that DeepSeek-V3 not only excels in benchmarks but also delivers practical value in real-world applications requiring high-level reasoning.

4. Performance and Benchmarking

Unmatched Inference Speed

Generating about 60 tokens per second, DeepSeek-V3 is roughly three times as fast as its predecessor, DeepSeek-V2, making it an efficient choice for real-time applications.

Industry Benchmarks

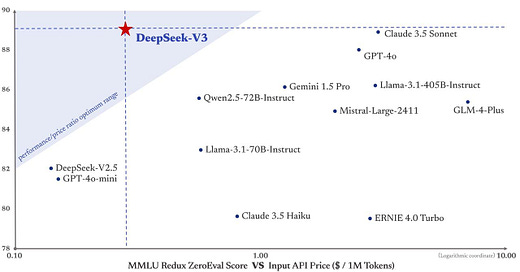

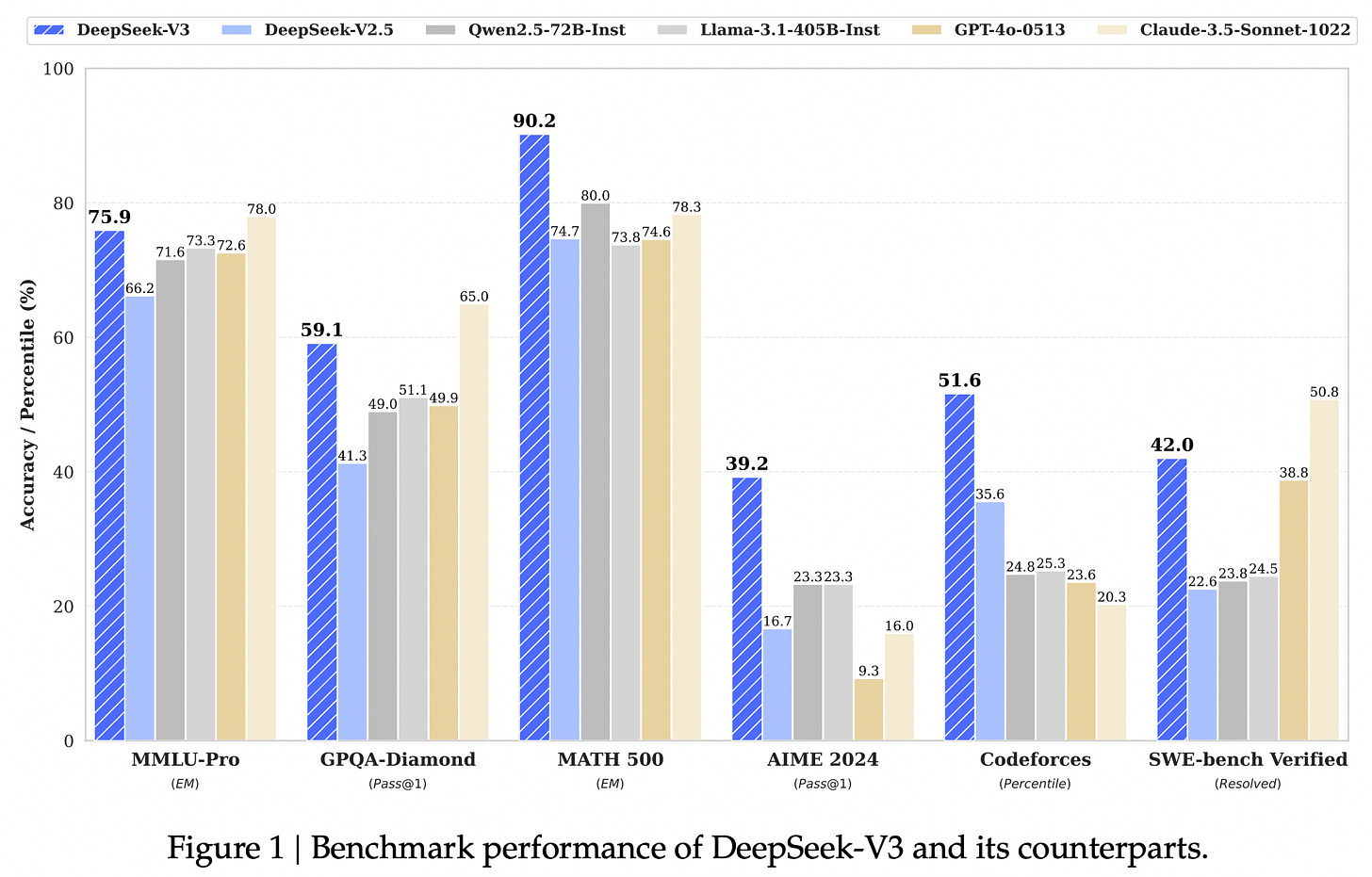

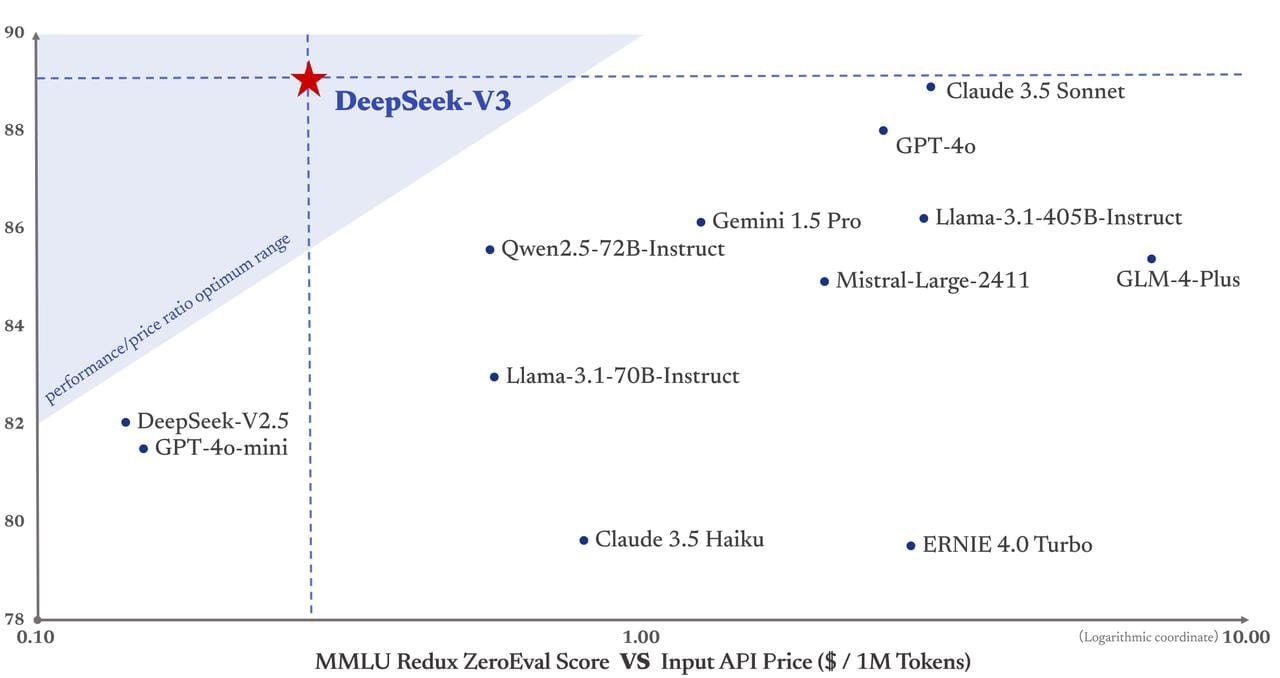

DeepSeek-V3 has raised the bar for open-source AI, outperforming open-source competitors such as Llama 3.1 405B, Qwen, and Mistral. Its performance places it on par with industry titans like OpenAI’s GPT-4o and Claude 3.5 Sonnet. The charts above give a detailed comparison of benchmark results.

5. Efficiency

Optimized Training Resources

DeepSeek-V3 was trained using 2.788 million H800 GPU hours, a small budget compared to many leading large language models (LLMs). Meta’s Llama 3.1 405B, for instance, required approximately 31 million GPU hours on H100-80GB GPUs, roughly eleven times DeepSeek-V3’s total. Similarly, NVIDIA’s Nemotron-4 reportedly utilized 6,144 H100 GPUs over six months, processing a similar scale of data but at a far greater cost and resource intensity. Even OpenAI’s GPT-3, despite being an older model, is estimated to have required over 1 million GPU hours, and newer GPT versions likely demanded far more, though exact figures remain undisclosed. DeepSeek’s training cost of $5.576 million reflects its highly optimized processes, including FP8 mixed precision and the HAI-LLM framework. While other models like DBRX and Mistral have notable achievements, they have not demonstrated the same balance of efficiency and performance. These comparisons highlight DeepSeek’s ability to achieve state-of-the-art performance with fewer resources, positioning it as a leader in advancing LLM development.
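The headline cost follows directly from the GPU-hour count once a rental price is assumed. The short calculation below reproduces it with an assumed rate of $2 per H800 GPU hour (the rate is an assumption of this sketch, not something stated above) and compares the GPU-hour totals quoted for the two models.

```python
DEEPSEEK_GPU_HOURS = 2.788e6  # H800 GPU hours, from the text
LLAMA_GPU_HOURS = 31e6        # approximate H100 GPU hours, from the text
ASSUMED_RATE_USD = 2.0        # assumed rental price per GPU hour

training_cost = DEEPSEEK_GPU_HOURS * ASSUMED_RATE_USD
gpu_hour_ratio = LLAMA_GPU_HOURS / DEEPSEEK_GPU_HOURS

print(f"estimated cost: ${training_cost / 1e6:.3f} million")  # $5.576 million
print(f"GPU-hour ratio: {gpu_hour_ratio:.1f}x")               # 11.1x
```

The ratio compares hours on different GPU models (H100 versus H800), so it indicates scale rather than an exact like-for-like cost difference.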

Competitive API Pricing and Hosting

Currently, DeepSeek is offering promotional pricing until February 8, 2025, with rates set at ¥0.1 (about $0.014) per million input tokens (cache hits), ¥1 (about $0.14) per million input tokens (cache misses), and ¥2 (about $0.28) per million output tokens.

After the promotional period, the pricing will adjust to ¥0.5 (about $0.07) per million input tokens (cache hits), ¥2 (about $0.27) per million input tokens (cache misses), and ¥8 (about $1.10) per million output tokens.

Compared to other large language model (LLM) APIs, DeepSeek-V3's pricing is competitive. For instance, OpenAI's GPT-4o is priced at $2.50 per million input tokens and $10 per million output tokens, while Anthropic's Claude 3.5 Sonnet is offered at $3 per million input tokens and $15 per million output tokens.
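Per-million-token prices are easier to compare once they are applied to a workload. The sketch below is a small cost calculator using the GPT-4o and Claude 3.5 Sonnet rates quoted in the previous paragraph; the `Pricing` class, the `request_cost` function, and the monthly token volumes are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    """API rates in US dollars per million tokens."""
    input_per_million: float
    output_per_million: float

def request_cost(pricing, input_tokens, output_tokens):
    """Total cost in US dollars for the given token counts."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000

# Rates quoted in the text above.
GPT_4O = Pricing(input_per_million=2.5, output_per_million=10.0)
CLAUDE_35_SONNET = Pricing(input_per_million=3.0, output_per_million=15.0)

# Hypothetical monthly workload: 50M input tokens and 10M output tokens.
monthly_input, monthly_output = 50_000_000, 10_000_000
gpt_cost = request_cost(GPT_4O, monthly_input, monthly_output)
claude_cost = request_cost(CLAUDE_35_SONNET, monthly_input, monthly_output)
print(f"GPT-4o:            ${gpt_cost:,.2f}")     # $225.00
print(f"Claude 3.5 Sonnet: ${claude_cost:,.2f}")  # $300.00
```

The same function can be applied to DeepSeek's rates from the price list above by constructing another `Pricing` value; cache hits and misses would need separate input rates.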

6. Features of DeepSeek-V3

Advanced Reasoning and Deep Thinking

DeepSeek-V3 excels in complex reasoning tasks, thanks to its post-training enhancements that integrate verification and reflection techniques. This allows the model to solve intricate problems in mathematics, logic, and programming with exceptional precision. Whether you're debugging code or solving a logic puzzle, DeepSeek-V3 consistently delivers structured and reliable solutions, making it a strong contender against ChatGPT in technical and analytical domains.

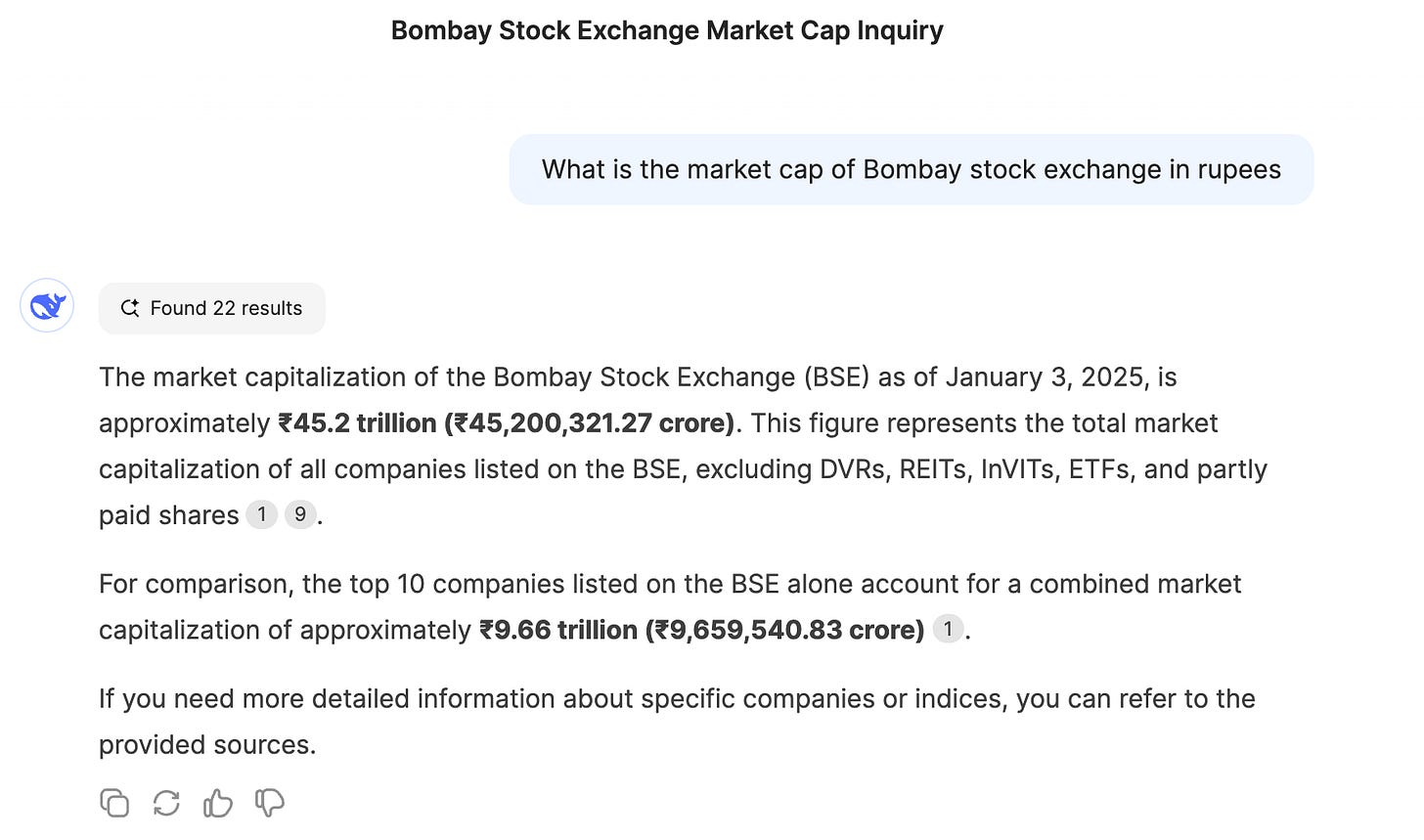

Search-Enhanced Responses

In DeepSeek’s chat interface, DeepSeek-V3 can be paired with a built-in web search option that fetches and synthesizes information from external sources to provide up-to-date, context-aware answers. Unlike a model that relies only on its training data, it can check claims against retrieved sources, which helps improve the accuracy of responses. This feature is particularly useful for fact-checking, generating up-to-date content, or tackling domain-specific queries.

Multi-Modal Input Support

DeepSeek-V3 itself is a text-only language model. In DeepSeek’s chat application, users can upload files such as documents or images, and the text extracted from them is passed to the model for analysis or summarization. Interpreting purely visual content, such as the shape of a chart or the contents of a photo, requires a separate vision-language model, so ChatGPT’s multi-modal models currently offer broader image understanding.

128k Token Context Window

With a massive 128k token context window, DeepSeek-V3 can process lengthy and complex documents without losing coherence. This feature is ideal for tasks like summarizing books, analyzing contracts, or handling multi-turn conversations. While ChatGPT offers extended context windows in its higher-tier models, DeepSeek-V3 democratizes this capability with its open-source accessibility.
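In practice, a long document still has to be checked against the window before it is sent. The sketch below estimates token counts with a rough heuristic of 4 characters per token and splits oversized text into chunks that fit; the heuristic, the 4,000-token output reserve, and the function names are assumptions for illustration, and a real application would count tokens with the model's own tokenizer.

```python
CONTEXT_WINDOW = 128_000  # tokens, from the text
CHARS_PER_TOKEN = 4       # rough heuristic (assumption), not the real tokenizer

def estimate_tokens(text):
    """Approximate token count using ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)

def fits_in_context(text, reserved_for_output=4_000):
    """True if the prompt plus the space reserved for the reply fits the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW

def split_to_fit(text, reserved_for_output=4_000):
    """Cut text into consecutive chunks that each fit the window."""
    budget_chars = (CONTEXT_WINDOW - reserved_for_output) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]

# Hypothetical document of one million characters (about 250,000 tokens).
document = "x" * 1_000_000
print(fits_in_context(document))  # False
chunks = split_to_fit(document)
print(len(chunks))                # 3
```

Splitting on fixed character offsets is the simplest possible strategy; splitting on paragraph or section boundaries usually preserves more coherence.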

Coding and Data Analysis

DeepSeek-V3 is well suited to tasks requiring precision, such as coding and data analysis. Developers can use it to debug complex code, generate optimized database queries, and explain intricate algorithms clearly and reliably.
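As a rough illustration of how a developer might hand a debugging task to the model, the sketch below builds a chat-style request body in the widely used OpenAI-compatible format. The endpoint URL and the model name `deepseek-chat` are assumptions about DeepSeek's hosted API and should be checked against its current documentation; `build_chat_request` is a hypothetical helper, and the code only constructs the payload without sending it.

```python
import json

# Assumed values; verify against DeepSeek's API documentation before use.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL_NAME = "deepseek-chat"

def build_chat_request(user_prompt, system_prompt="You are a careful coding assistant."):
    """Return a chat-completions request body as a plain dictionary."""
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.0,  # low temperature for more deterministic code answers
    }

buggy_code = "def mean(xs):\n    return sum(xs) / len(xs) - 1\n"
payload = build_chat_request(f"Find and fix the bug in this function:\n{buggy_code}")
print(json.dumps(payload, indent=2))
```

Sending the payload would require an HTTP POST to the endpoint with an API key in the `Authorization` header, which is omitted here.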

7. Examples

Search Example

Creative Writing Example

Coding Example

Reasoning Example

8. Open-Source Commitment

DeepSeek-V3 exemplifies a balanced approach to open-source innovation by providing accessibility while ensuring responsible usage through its licensing arrangements. The model and its accompanying codebase are distributed under two distinct licenses:

Model License

The model is provided under DeepSeek's proprietary Model License, which permits use, reproduction, and distribution, including for commercial purposes, subject to specific terms and conditions. These terms include use-based restrictions to promote ethical and responsible AI deployment.

Code License

The accompanying codebase is released under the MIT License, a permissive open-source license that allows for extensive reuse with minimal restrictions, fostering broad adoption and adaptation by the developer community.

By combining these licensing strategies, DeepSeek-V3 ensures open access while addressing ethical considerations in AI development. This dual licensing model not only accelerates innovation but also empowers organizations to leverage state-of-the-art AI responsibly. True to its open-source ethos, DeepSeek-V3 fosters a spirit of collaboration, enabling developers and organizations to build on its robust foundation and drive advancements across industries.

9. Conclusion

DeepSeek-V3 stands out as a versatile and powerful tool, offering reasoning and mathematical capabilities that raise expectations for open-source AI. Its post-training enhancements help it excel in tasks requiring complex problem-solving and logic, matching or exceeding GPT-4o on several benchmarks and rivaling closed-source leaders such as Claude 3.5 Sonnet. While Claude 3.5 Sonnet may hold an edge in creative writing and coding finesse, DeepSeek-V3’s strengths in reasoning and mathematics make it highly competitive in analytical and technical domains. Additionally, its open-weight nature allows organizations to host the model themselves, ensuring greater flexibility and control, which is a distinct advantage for developers building custom applications on top of LLMs. For those seeking a cost-effective, high-performance model capable of delivering state-of-the-art results, DeepSeek-V3 is a compelling choice.