The AI Stack: Understanding the Key Layers Powering the AI Ecosystem

A Breakdown of AI Infrastructure, Data, Models, Tooling, and Applications for Builders and Businesses

AI is transforming industries at an unprecedented pace, but to truly understand where value is created and how businesses differentiate, it's essential to break down the AI stack. While this might be obvious to some, having a clear framework helps founders, builders, and investors pinpoint opportunities, competition, and where real moats can be built.

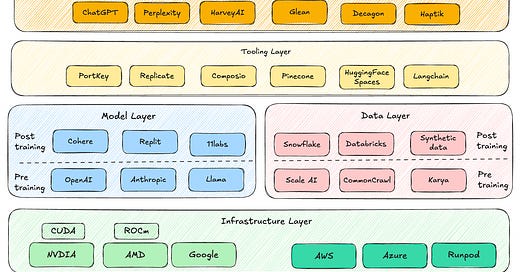

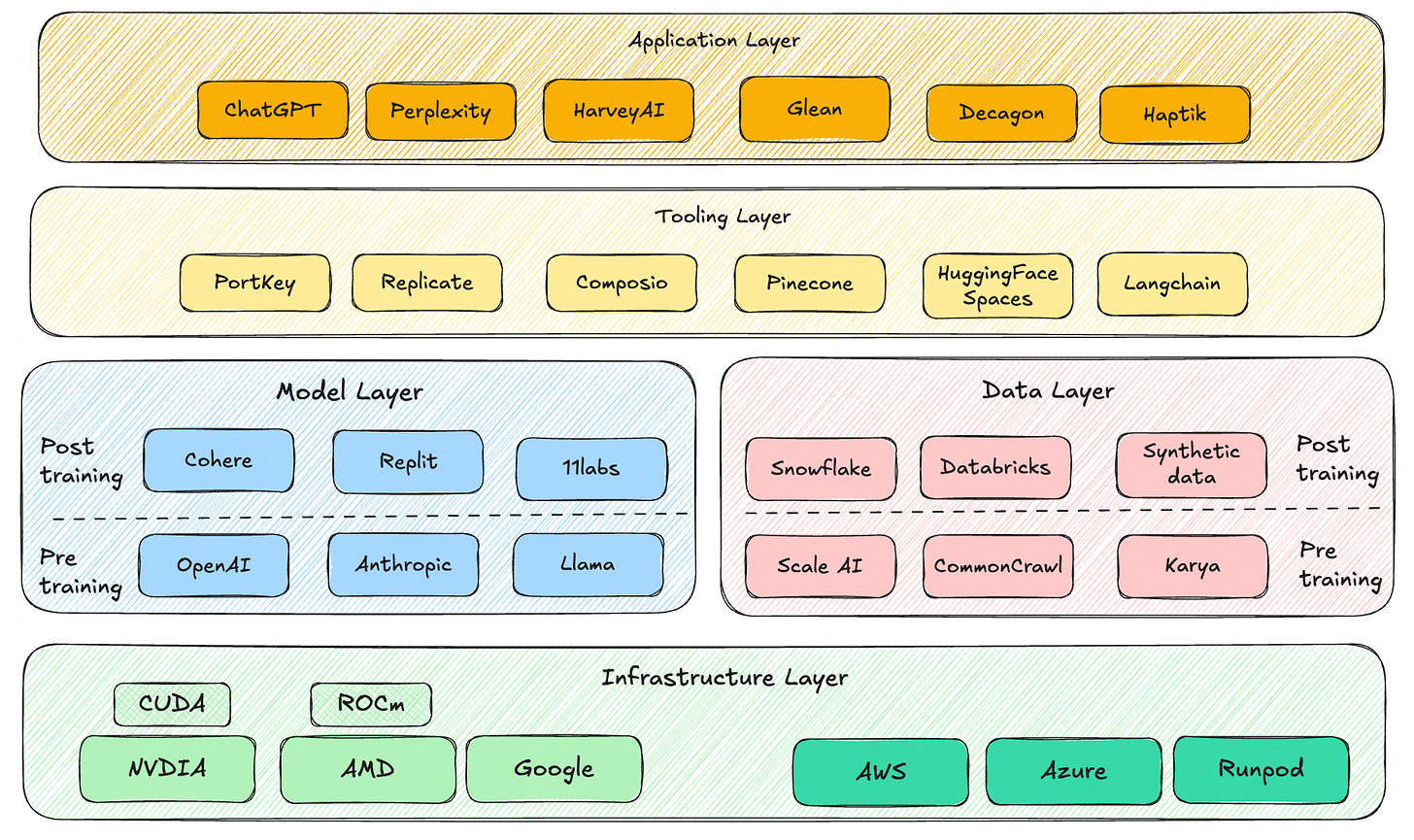

Here’s how I see the full AI stack, from the foundational infrastructure to the applications that deliver AI-powered experiences to users.

1. Infrastructure Layer (The Hardware & Compute Backbone)

At the base of the AI stack lies the infrastructure layer, which includes the hardware and low-level software required to run AI models. This consists of:

GPUs & AI Accelerators – NVIDIA, AMD, Intel, Google TPUs, AWS Trainium, etc.

Compute & Cloud Providers – AWS, Google Cloud, Azure, Oracle Cloud, etc.

Software Libraries – CUDA (for NVIDIA GPUs), ROCm (for AMD), oneDNN (for Intel), etc.

Without this layer, no AI models can be trained or deployed efficiently. The companies operating here are capital-intensive but provide the critical foundation for the AI ecosystem.

(Note: Electricity, cooling, and data center infrastructure also play a crucial role in this layer, but I have not gone deep into them here.)

2. Model Layer

The model layer consists of two key types of AI models:

Foundation Models (Pre-Training) – These are large-scale models trained on vast datasets and used as the base for further adaptation. Companies building these include OpenAI (GPT-4, DALL·E), Anthropic (Claude), Google (Gemini), Mistral, Meta (Llama), etc.

Post-Trained & Fine-Tuned Models – These are models that have been adapted using proprietary or domain-specific data for targeted use cases. Companies working here include Cohere, Hugging Face, Stability AI, Replit (code models), and others focused on specific domains such as legal, medical, or scientific AI.

This distinction is crucial, as foundation models provide a general base, while post-trained models refine them for real-world applications.

The foundation model layer is where most of the cutting-edge AI research happens, and while only a few companies have the resources to train these models from scratch, many build upon them.

3. Data Layer

Like the model layer, the data layer can be divided into two parts: data for foundation models (pre-training) and data for post-training and inference. Each requires different kinds of datasets:

Data for Foundation Models (Pre-Training) – Large-scale pre-training draws on open web corpora such as Common Crawl (a nonprofit that publishes web crawl data), while companies like Scale AI, Karya, and Labelbox specialize in data annotation and labeling.

Data for Post-Training & Inference – Data platforms like Snowflake and Databricks (which also released the DBRX model), along with synthetic data providers, focus on fine-tuning and real-time inference needs.

This layer is crucial because high-quality, diverse, and well-labeled data directly impacts model performance and reliability.

4. Tooling Layer

Once foundation models exist, they need to be adapted, fine-tuned, and deployed efficiently. The tooling layer includes:

MLOps & AI Deployment – Portkey, Weights & Biases, MLflow, etc.

Vector Databases & Retrieval-Augmented Generation (RAG) – Pinecone, Weaviate, ChromaDB, etc.

AI API Marketplaces – Hugging Face Spaces, Replicate, AssemblyAI, etc.

Agentic & Workflow Tools – Composio, LangChain, LlamaIndex, and other orchestration frameworks that make AI more useful in workflows.

This layer is about enabling businesses to use AI effectively—ensuring reliability, monitoring, and deployment at scale.
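To make the retrieval-augmented generation (RAG) idea above concrete, here is a minimal sketch of the retrieval step in plain Python. It is a toy under stated assumptions, not how Pinecone, Weaviate, or ChromaDB work internally: the `embed` function is a bag-of-words stand-in for a real embedding model, and `DOCS`, `retrieve`, and `build_prompt` are hypothetical names chosen for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question before sending it to a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge base for illustration only.
DOCS = [
    "refund policy: refunds are issued within 14 days",
    "shipping policy: orders ship within 2 business days",
    "support hours: weekdays from 9 to 5",
]

if __name__ == "__main__":
    print(build_prompt("when are refunds issued", DOCS))
```

A vector database plays the role of `retrieve` here, but with learned embeddings and an index that stays fast across millions of documents. The model only sees the prompt that `build_prompt` assembles.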

5. Application Layer

At the top of the stack sits the application layer, where AI is delivered to end users. These applications integrate models, data, and infrastructure to solve real-world problems. Examples include:

AI Assistants & Chatbots – ChatGPT, Perplexity, Jasper, Copy.ai, etc.

AI in Vertical Markets – Legal (Harvey AI), Healthcare (Qure.ai), etc.

A key aspect of succeeding in this layer is seamless integration with other systems and workflows. AI applications need to be embedded into existing software stacks, enterprise systems, or consumer applications to maximize utility and adoption. Companies that effectively integrate AI into industry-specific workflows or existing SaaS products have a better chance of owning this layer and delivering differentiated value.

Why This Matters

The AI ecosystem is rapidly evolving, and companies that fail to clearly define their place within the stack risk being outpaced by more strategic players. Whether you're a founder, investor, or engineer, understanding the layers you operate in is essential for long-term success. Here’s why this matters:

Identify Your Unique Edge – Are you competing in a commoditized layer, or are you creating unique value? Differentiation is key to building a sustainable business.

Anticipate Market Shifts – AI is not static. New technologies, open-source advancements, and regulatory changes constantly reshape the landscape. Knowing your layer helps you stay ahead.

Know Your True Competitors – Your biggest challenge might not be another startup but a hyperscaler, a foundation model provider, or an incumbent integrating AI more effectively.

Build Defensible Moats – True AI moats come from ownership—of data, distribution, or deep integrations within enterprise workflows. Relying too much on other layers can leave you vulnerable.

Different companies operate at different layers of the AI stack. For example, an AI application company might use proprietary customer data to fine-tune models but still rely on foundation models from OpenAI or Anthropic and infrastructure from AWS. Clearly defining which layers you are leveraging vs. where you are creating unique differentiation helps businesses make strategic decisions on partnerships, investments, and long-term vision.
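One way to run this exercise is to write the mapping down explicitly. The sketch below encodes the example company from the paragraph above as a small Python structure. The `LayerChoice` type, the `owned` flag, and the helper functions are my own illustrative assumptions, and marking the application as an "in-house product" is an assumption beyond what the example states.

```python
from dataclasses import dataclass

@dataclass
class LayerChoice:
    layer: str      # which layer of the stack
    provider: str   # who supplies it
    owned: bool     # True if the company builds or controls this itself (assumed flag)

def differentiation_layers(choices):
    """Layers where the company creates its own value."""
    return [c.layer for c in choices if c.owned]

def dependency_layers(choices):
    """Layers where the company relies on someone else."""
    return [c.layer for c in choices if not c.owned]

# The AI application company from the example above.
example_company = [
    LayerChoice("infrastructure", "AWS", owned=False),
    LayerChoice("model", "OpenAI or Anthropic foundation model", owned=False),
    LayerChoice("data", "proprietary customer data", owned=True),
    LayerChoice("application", "in-house product", owned=True),  # assumption
]

if __name__ == "__main__":
    print("Differentiating in:", differentiation_layers(example_company))
    print("Depending on:", dependency_layers(example_company))
```

Listing the dependency layers next to the differentiation layers makes it easier to see where a provider change or a new competitor would hurt most.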

This is just the beginning. In a future post, I’ll explore AI moats—how companies can build lasting defensibility in an industry that is evolving faster than ever.

Thanks to Pratyush and Aakrit for reviewing and providing valuable feedback on this post.