Understanding Agentic AI Architecture

A Comprehensive Guide for Beginners to Experts

Introduction

Agentic AI represents a fascinating and complex frontier in the development of artificial intelligence. Unlike traditional machine learning models that passively receive inputs and produce outputs, agentic AI involves entities, called agents, that can make decisions, interact with their environment, and achieve specific goals. These agents utilize components such as reasoning, memory, and planning to perform tasks autonomously, even collaborating with other agents to solve larger problems. This paper provides a detailed exploration of agentic AI, covering concepts from beginner to expert level, while offering practical examples and applications to illustrate these concepts effectively.

What is Agentic AI

Agentic AI refers to AI systems that demonstrate autonomous behavior, actively working to achieve goals with minimal direct human intervention. An agentic AI system is capable of understanding its environment, reasoning about available options, planning its actions, executing tasks, and learning from its experiences. The term "agentic" suggests that these systems can take initiative, make decisions, and even communicate with other agents to achieve complex tasks.

Examples

Customer Support Agents: Customer support agents use agentic AI to interact with customers, answer queries, and escalate issues when needed. These agents can provide round-the-clock support, adapt to the customer's needs, and learn from previous interactions to improve their responses.

Marketing Agents: Marketing agents are designed to autonomously manage marketing campaigns, including segmenting audiences, scheduling posts, and optimizing ads based on real-time performance data. They help businesses achieve better outreach with minimal human intervention.

Coding Agent: Coding agents are designed to autonomously generate code, scripts, or perform debugging tasks. These agents are useful for automating repetitive coding tasks, generating boilerplate code, or creating custom functions as needed. They can operate within an integrated development environment (IDE) to execute specific commands, optimizing the software development lifecycle.

Virtual Assistants: Personal assistants like Alexa or Google Assistant use agentic AI to respond to user requests, set reminders, make recommendations, and control smart devices, adapting to users' preferences.

Financial Trading Bots: Automated trading agents are capable of making trading decisions in real time, based on market conditions, predefined rules, and evolving strategies.

Agents and System 1 vs. System 2 Thinking

In the book Thinking, Fast and Slow by Daniel Kahneman, human cognition is described in terms of two systems: System 1 and System 2. System 1 thinking is fast, intuitive, and automatic, while System 2 thinking is slower, more deliberate, and logical. This concept can be applied to understanding the operation of agentic AI.

System 1 Agents: These agents are designed to make rapid decisions and respond to simple, routine tasks. They operate in a way similar to System 1 thinking—quickly and efficiently. For example, a customer support agent that gives instant responses based on predefined rules or patterns is akin to System 1 thinking, where speed and immediacy are prioritized.

System 2 Agents: These agents handle more complex tasks requiring careful reasoning, planning, and evaluation. They embody System 2 thinking, which involves a deeper, more thoughtful approach to decision-making. Agents like the Planning agent are meant to exhibit System 2 behavior, where multiple layers of analysis, planning, and evaluation come into play before a decision is made.

Agentic AI often blends these two modes of operation—rapid, instinctual responses for well-defined tasks (System 1) and more comprehensive, deliberate actions for complex decision-making (System 2). Understanding this distinction helps to design agents that can leverage both approaches effectively, depending on the nature of the task at hand.

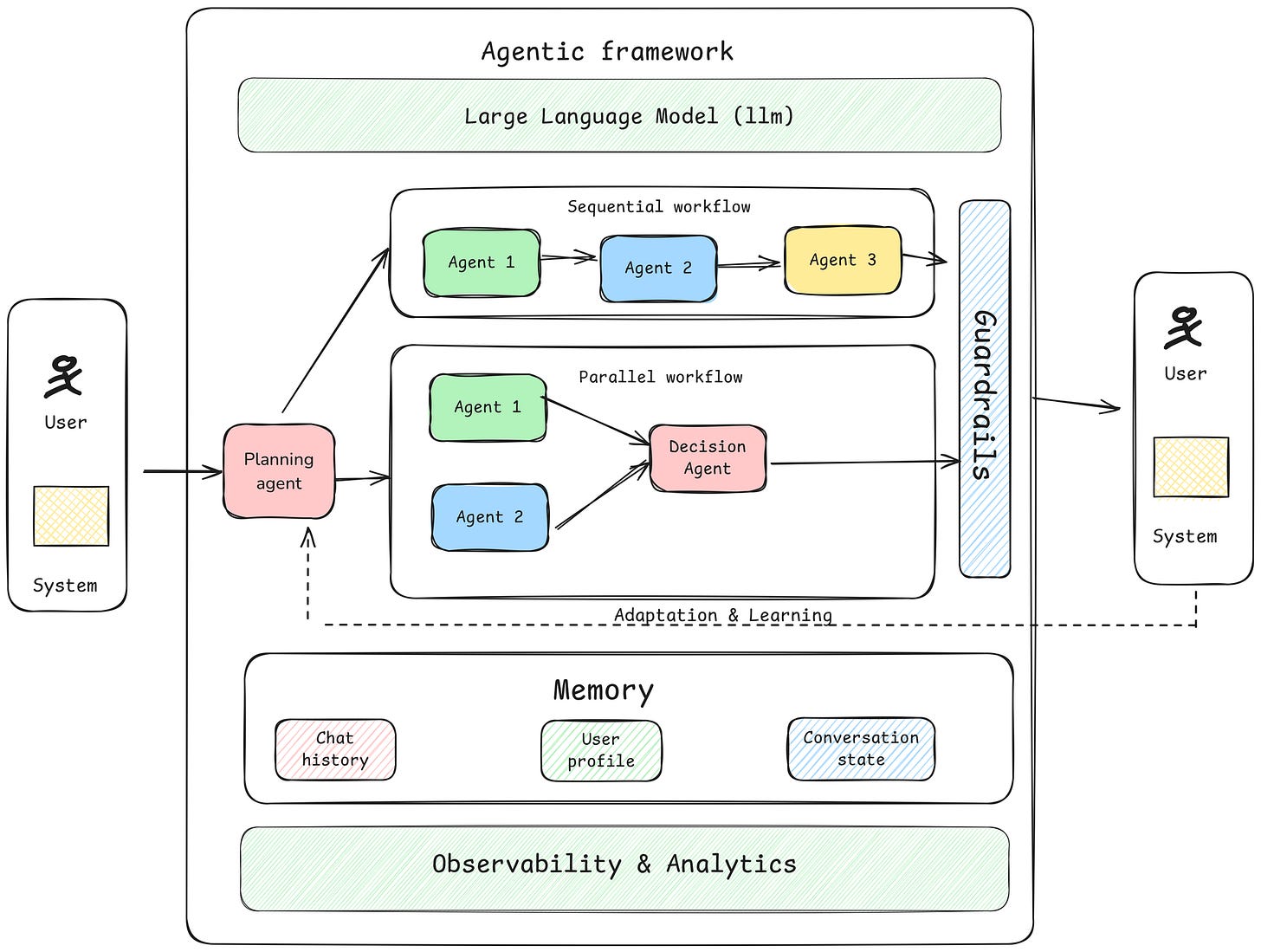

Agentic AI Architecture and Components

Above is a diagram depicting the components of an agentic framework.

Triggering Mechanisms

Any agentic system can be initiated by a user interacting with it or by another system triggering an action, either through a webhook or a frequency timer.

LLM

Every agentic framework is dependent on a language model (LLM). Each component can access the same or different LLM to complete its goal, providing flexibility and scalability in handling various tasks.

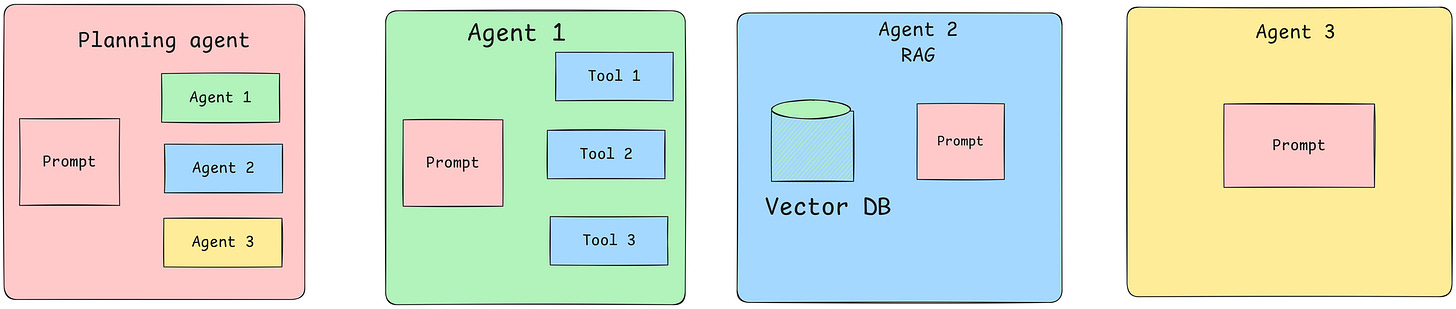

Planning Agent

The planning agent serves as the orchestration component, which includes reasoning, planning, and task decomposition. This agent knows all other agents and, through proper planning, reasoning, and task decomposition, decides which agents to execute and in what order. LLMs with reasoning capabilities (such as OpenAI’s o1-preview) are best suited for such agents but should be used carefully to balance other considerations of an agentic system.

Agents

Agents encapsulate a set of instructions and tools to achieve a given task.:

Prompts: Commands given to a language model along with the tools it has access to.

Tools: Execution blocks that perform actions, such as simple code blocks, API calls, or integrations with other systems.

Environments: Tools may also have environments associated with them to execute specific tasks, such as IDEs or general computer use.

Complex Agents: Agents can also be entire architectures, such as Retrieval-Augmented Generation (RAG), which include embeddings and vector databases.

Memory: Memory in agentic AI allows agents to store information and recall it in future interactions. Memory is available to all components at all times and can include different types:

User Profile: User-specific information that helps agents create personalized experiences.

Chat History: The history of conversations, allowing agents to pull context from past interactions.

Chat State: Tracking the workflows that have been executed to avoid duplicating tasks.

Guardrails

Guardrails are safety mechanisms that prevent harmful behaviors while ensuring robustness in handling unforeseen inputs or scenarios. Rules such as “Ensure there is no mention of competitors in the reply”, “Avoid discussing religion or politics” are a few such examples that should exist at a framework level. These constraints are crucial for deploying agents in dynamic environments, providing default safety checks that can be edited if needed.

Agent Observability

Observability allows developers and users to understand what an agent is doing and why. Providing transparency in agent behavior helps diagnose issues, optimize performance, and ensure that the agent's decisions are aligned with the desired outcomes.

Adaptation and Learning

Adaptation involves an agent's ability to modify its behavior based on feedback from the environment. This includes reinforcement learning or other adaptive techniques that enable agents to optimize their decision-making over time. For example, a marketing agent may adapt its strategy based on changing customer preferences.

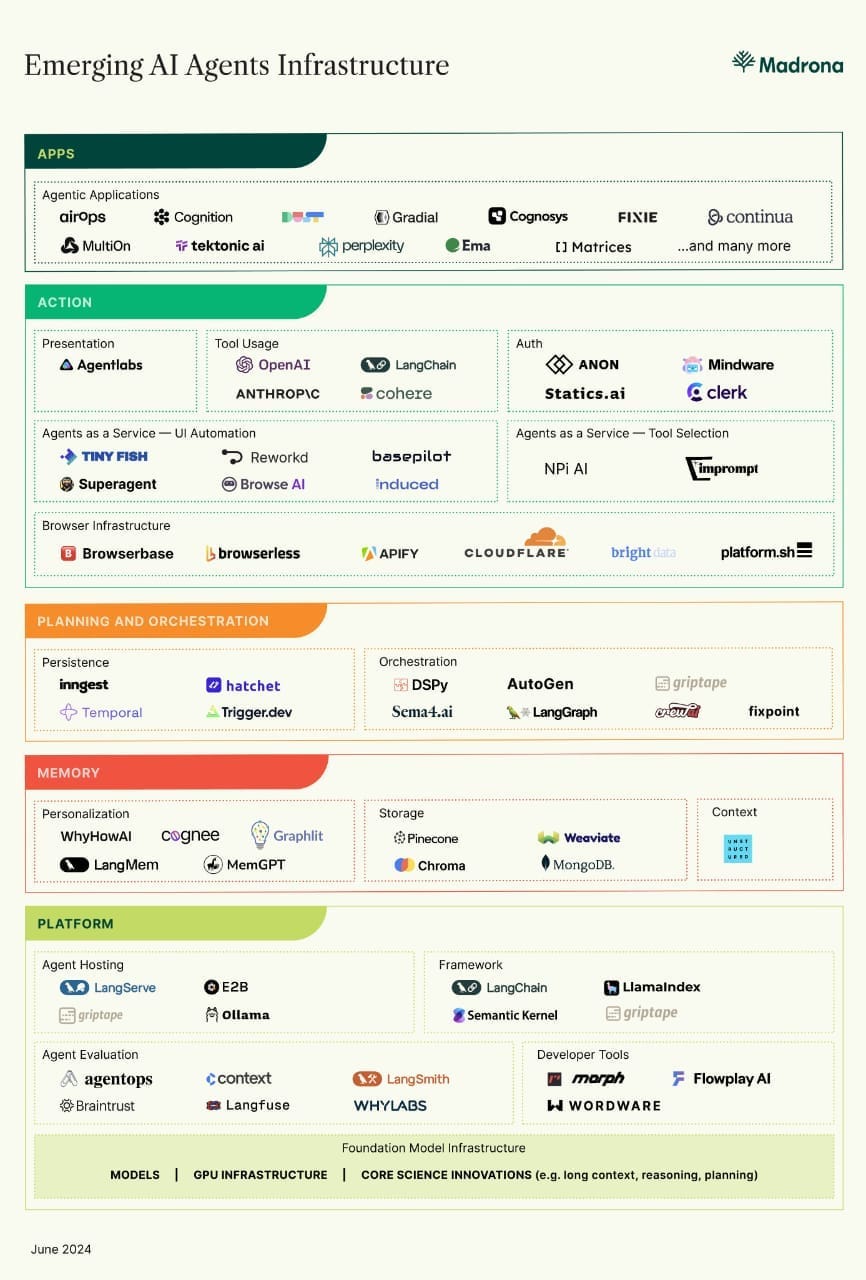

AI Agents Ecosystem

The agent AI infrastructure has evolved tremendously over the last year and is expected to continue evolving rapidly. This growth has brought many new tools and components that facilitate building agentic AI systems.

The diagram provided by Madrona showcases the different parts of the ecosystem, including components such as agent hosting, orchestration, memory, platform tools, and more.

Pros

Diverse Tooling: There are numerous good tools available for every part of building agentic AI. This diversity allows developers to pick specialized tools for their specific requirements.

Designed for simplicity: Many of these tools are designed with user-friendly interfaces or straightforward APIs, making it easy to get started and develop prototypes quickly in each area.

Cons

Fragmented Ecosystem: The large number of specialized tools results in a highly fragmented ecosystem, which requires a lot of effort to stitch together. Developers often spend more time integrating and managing multiple tools than on core development.

Integration Complexity: Ensuring compatibility and efficient communication between different tools can be complex and time-consuming, adding significant overhead to projects.

While this diversity in tooling enables a high degree of customization and specialization, it also presents a significant challenge for developers, who must figure out how to integrate them seamlessly into their frameworks.

This fragmentation often makes development cumbersome and time-consuming, especially as developers have to manage multiple tools that may or may not work well together. As the ecosystem continues to grow, consolidation into unified frameworks or more comprehensive tools will be key to simplifying the development process. Such consolidation would make it easier for developers to implement agentic AI systems without constantly dealing with integration issues and compatibility challenges.

Ultimately, while the pace of evolution in agentic AI infrastructure is encouraging, the need for cohesive and consolidated tools is crucial to ensuring that development remains accessible, efficient, and scalable for a wide range of use cases.

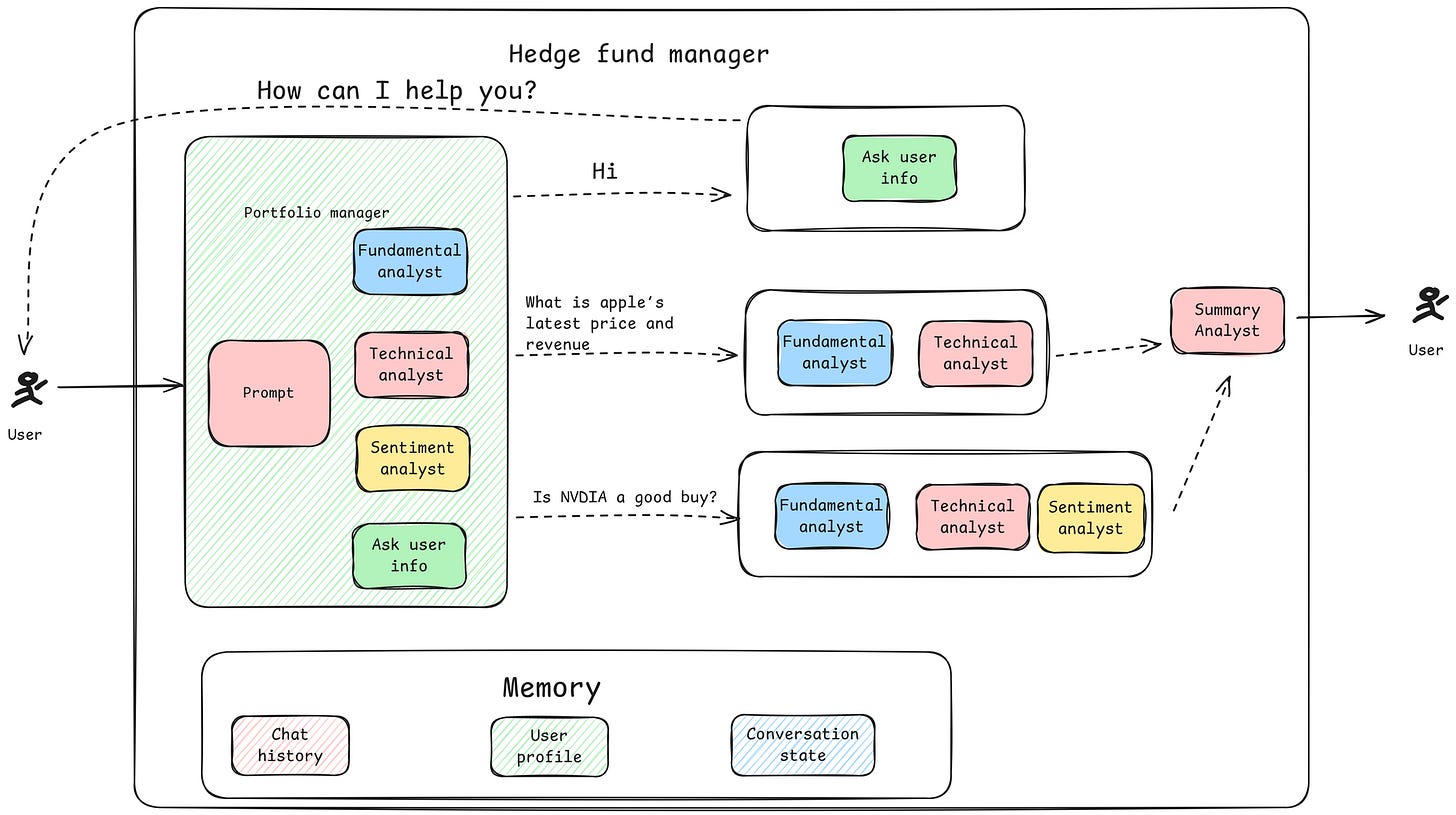

Example: Hedge Fund Agent

To evaluate the above frameworks, we use an example of a hedge fund agent that does different analyses to give a summary and decision about a public company stock

The above is the architecture of a hedge fund team of analysts **

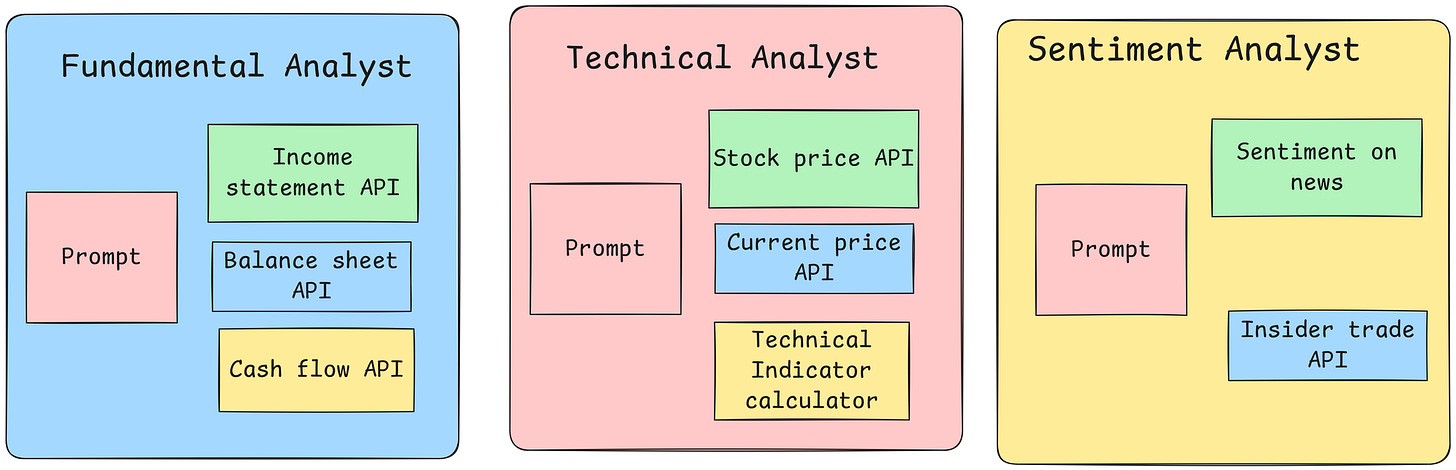

Portfolio Manager: Acts as a planning agent, orchestrating the overall decision-making process by deciding which agents to call and in what order.

Fundamental Analyst: Performs fundamental analysis of a stock, evaluating financial statements, revenue, profitability, and other indicators.

Technical Analyst: Conducts technical analysis using historical market data, price trends, and chart patterns.

Sentiment Analyst: Focuses on news articles, social media, and other public sentiment sources to gauge market emotions.

Summary Analyst: Takes inputs from other analysts and decides whether the stock should be a Buy, Hold, or Sell.

User-Asking Analyst: Reaches out to the user when additional information is required to proceed with further analysis.

Frameworks

Various frameworks exist to make it easy to build agents. Below is a quick evaluation of all the frameworks having implemented them in most of the frameworks below:

Framework Notes

Most of the frameworks have most of the functionality and differ in implementation detail rather than functionality.

Langchain: Easy to get started but hard to build complex flows. Best used alongside LangGraph.

LangGraph: Along with Langchain, Langgraph is a very powerful tool. Takes a little getting used to, and visualizing the graph is hard, making it challenging for someone to understand the flow without deep exploration.

CrewAI: Overall a great framework that allows all functionality. Also has a Saas platform to make it easier to use. Allows hierarchical flows, but more complex flows are still on the roadmap.

Swarm: Primarily an educational framework and not yet ready for production. It has most functionalities, but combining them to build complex flows seems limited.

Microsoft AutoGen: To be evaluated soon.

Observations

No one has a way to build guardrails on a framework level. There is a framework by NVIDIA called NeMo Guardrails that can allow plug-in programmable guardrails but must be plugged in separately.

Memory for most is done using states and context variables. All of this needs to be managed manually

Planning is possible via regular agents, but state handling needs to be done manually

Dynamic workflows are done using handoffs, but langgraph and crewAI make it easier to build graphs.

Evaluation of Agents

Evaluating agentic AI is crucial for ensuring reliability, performance, and safety. Due to the need for fast iteration and the subjective nature of evaluating agents, there is often insufficient focus on testing and evaluation. Below, we discuss insights and best practices for effective testing of agentic AI systems.

Unit Evaluation

Ideally, each agent should have its own evaluations, akin to unit testing in software engineering. Unit evaluation ensures that individual agents behave as expected, meeting their functional requirements. Agents should be tested across different scenarios to validate their reasoning, planning, and execution accuracy. It is beneficial to keep the output structured for each agent, as structured output makes validation easier.

Integration Evaluation

The entire system should also undergo evaluations, akin to integration testing. Integration evaluation examines how well agents work together as a complete system, verifying that the interactions between agents lead to correct outcomes. This type of testing is crucial for identifying issues that arise from communication failures or unexpected dependencies among agents.

Runtime Verification

Runtime verification involves assessing the agents' outputs in real time to improve their adaptation and learning capabilities. There are 2 ways to do this, by having a human in the loop which can only be done on small amounts of data or by using larger models. Using larger models as runtime verifiers can help ensure that agents make decisions aligned with their intended goals. However, this can be computationally expensive. To mitigate costs, batched runtime verification or selective runtime verification (e.g., based on user feedback or critical events) can be employed. These verification methods help maintain system quality while adapting to new data over time.

Red Teaming

Red teaming is a critical component of evaluating agentic frameworks, especially for identifying vulnerabilities and ensuring robustness. This type of evaluation involves simulating adversarial conditions to determine how well the system can handle unexpected or potentially harmful inputs.

Red team testing helps expose weaknesses in agents' reasoning, memory handling, and interaction strategies. It is essential for understanding how an agent may fail under adversarial conditions and for building better safety measures. Incorporating red team testing into the evaluation process ensures that agents are resilient to attacks and can operate safely in diverse environments.

Best Practices

Structured Outputs: Ensure each agent's output is well-structured. Structured output makes it easier to validate correctness and promptly identify issues.

Testing at Scale: Use larger language models to test final outcomes to ensure scalability. Larger models can simulate a variety of user behaviors, which helps stress-test the system effectively.

Iterative Evaluation: Agents should be evaluated iteratively, allowing developers to identify weaknesses early and make improvements swiftly. Each iteration helps refine agents and contributes to more stable outcomes over time.

By incorporating these testing practices, developers can enhance the reliability, adaptability, and robustness of agentic AI systems.

Considerations in Developing Agentic AI

Despite the potential, agentic AI systems face several limitations that restrict their applicability.

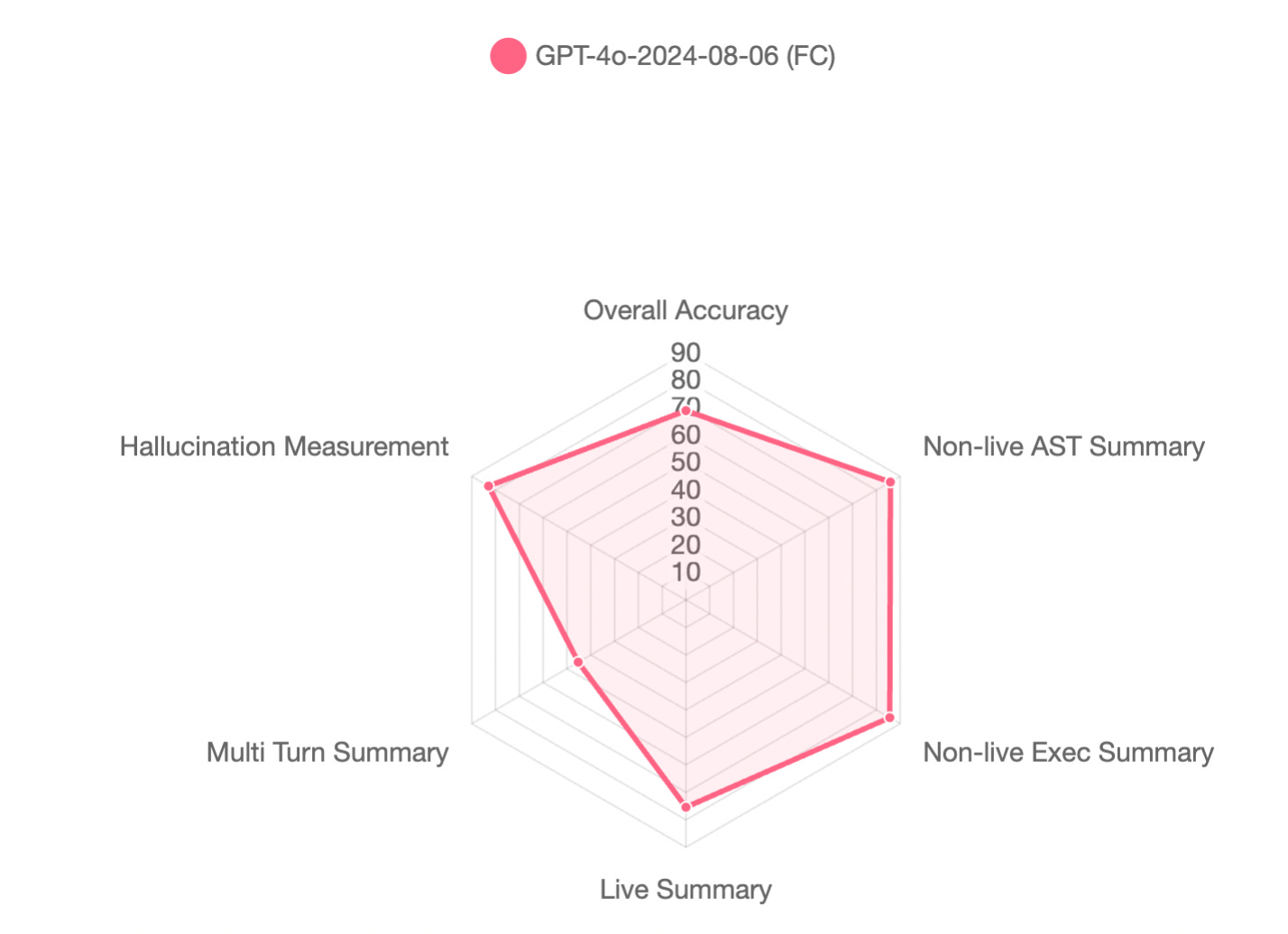

Accuracy of function calling

While LLMs can determine which tools to call, the accuracy of these decisions is still not great. According to the UC Berkeley Function-Calling Leaderboard, the best-in-class LLM has an accuracy of 68.9%, with a high measure of hallucinations. This limitation makes LLMs less reliable for high-risk use cases.

Best Practices for Improving Accuracy

Limit the Tools: Limit the number of tools to 4-5 per prompt. Beyond this, hallucinations increase, and accuracy decreases significantly.

Specific Prompts with Examples: Make the prompt very specific and try to provide examples of when to call which tool. This can help the LLM better understand the context, reducing errors and improving decision accuracy.

Good Evaluations for Tool Calling: Having thorough evaluations for tool calling is essential, as it helps measure and improve accuracy. This is achievable since the output can be structured, making it easier to validate if the correct tools were used effectively.

Cost

Running agentic AI can be expensive, especially given the number of language model (LLM) calls and the involvement of multiple agents. Managing memory, reasoning, and tool usage adds to computational overhead, making the operation costly. However, there are several strategies that can help mitigate these costs:

Pricing Dynamics: while agentic AI might be expensive today, the pricing trend is expected to decrease significantly over time (10x every year). Advances in model optimization and increasing competition among providers are leading to lower costs, making agentic AI more feasible in the near future.

LLM Selection: Each agent can use a different type of LLM that is best suited for its purpose. For instance, a planning agent may require a more powerful LLM capable of complex reasoning, while simpler agents can rely on lightweight LLMs that are less expensive. This selective usage can help control costs while maintaining overall system efficiency.

Hybrid Models: Utilizing a combination of LLMs and smaller, specialized models (such as rule-based systems) for tasks that do not require complex language understanding can also help reduce the reliance on costly LLM calls. Agents can offload simpler tasks to non-LLM models, thereby reducing the frequency of expensive operations.

Optimized Token Usage: Using tokens and prompts wisely can significantly reduce costs. For example, sending summaries or only the relevant portions of a code base can help reduce the token count, thereby minimizing LLM usage expenses.

Batch Processing and Shared Resources: Agents can be designed to share resources when possible. For instance, memory and intermediate results can be cached and reused by multiple agents, reducing redundant computations and LLM calls. This type of optimization helps cut down on both computational and financial costs.

Latency

Agentic AI often involves multi-step reasoning and interactions, which can introduce significant latency, especially when agents communicate or make calls to external tools. Below are some best practices for reducing latency:

Streaming Last Layer: For user-facing agents, making the last layer of the LLM streaming can significantly reduce the perceived latency. Streaming the output allows the system to provide tokens as they are generated, creating a faster response time for the end-user and improving the overall user experience.

Prompt Optimization: Prompts and results can often be identical across different users. To reduce latency, prompt caching can be employed to reuse previously computed results whenever possible. By caching common prompts, agents can bypass unnecessary recomputation, delivering faster responses.

Specialized LLMs: Not every agent requires the use of a large, complex LLM. By utilizing simpler LLMs for straightforward tasks and reserving the more resource-intensive models for complex reasoning, latency can be effectively minimized.

Parallel Execution: When possible, agents should run in parallel rather than sequentially. For example, a sentiment analysis and a technical analysis could be conducted simultaneously, reducing the total processing time for a task.

Efficient Runtime Strategies: Using batched or selective runtime verification can also reduce latency. Instead of verifying every output, only critical outputs or random samples may be checked, saving processing time and resources.

Alignment

Ensuring that agents are aligned with human values and objectives is an ongoing challenge in the development of agentic AI systems. Misaligned agents may make decisions that, while logical from their perspective, could be harmful or undesirable for users. Below, we explore best practices and approaches for improving alignment:

Fine-Tuning for Task-Specific Goals: Fine-tuning language models to specific tasks helps improve the alignment of agents with desired outcomes. By training on task-specific data, agents can better understand and meet the expectations of particular applications, ensuring their behavior is more predictable and aligned with user goals.

Reinforcement Learning from Human Feedback (RLHF): Reinforcement Learning from Human Feedback can be used to fine-tune agents based on user preferences and values. RLHF enables agents to iteratively improve their behavior by learning from user feedback, making them more attuned to nuanced requirements and avoiding undesired behaviors.

Consistency Checks: Regular consistency checks should be performed to ensure agents are making decisions in line with expected behavior. Consistency checks can include comparing agent outputs against a set of pre-defined acceptable responses, which helps identify misalignment early on.

Explainability for Better Alignment: Agents that can explain their reasoning are easier to align with human expectations. Explainable AI enables users to understand why an agent made a particular decision, providing transparency and making it easier to detect when an agent is deviating from its intended purpose.

Human-in-the-Loop Oversight: For critical applications, incorporating human-in-the-loop oversight is crucial. This allows for real-time intervention when agents make decisions that deviate from intended goals, ensuring safety and alignment with human values. Human oversight is especially important in high-risk scenarios where autonomous decision-making could have significant consequences.

Conclusion

In this comprehensive guide, we have explored the fundamental concepts and components of agentic AI, including their architecture, various examples, and frameworks. Agentic AI represents a unique approach to building autonomous systems capable of reasoning, planning, and adapting to achieve specific goals. We discussed how agents can operate like System 1 and System 2 thinking, blending fast, intuitive actions with deliberate and complex decision-making processes. Furthermore, we analyzed different frameworks that enable the implementation of agentic systems and shared insights on best practices for testing and evaluation.

While there are successful case studies, such as Amdocs using Nvidia NIM and Wiley working with Salesforce, challenges remain in making agents fully autonomous. Trust and scalable runtime verifiability are still significant obstacles, as is the question of how best to architect agents to achieve optimal results. Developing robust frameworks can play a key role in overcoming these challenges, and ongoing improvements in testing, including runtime verification and red team testing, will help ensure agents perform reliably and safely.

Stay updated with the latest insights on Agentic AI. Subscribe to our newsletter below.

References and Links

** Taken from @virattt ‘s github repository of an AI hedge fund team. Can be found here.

https://github.com/NVIDIA/NeMo-Guardrails?tab=readme-ov-file

https://www.madrona.com/the-rise-of-ai-agent-infrastructure/